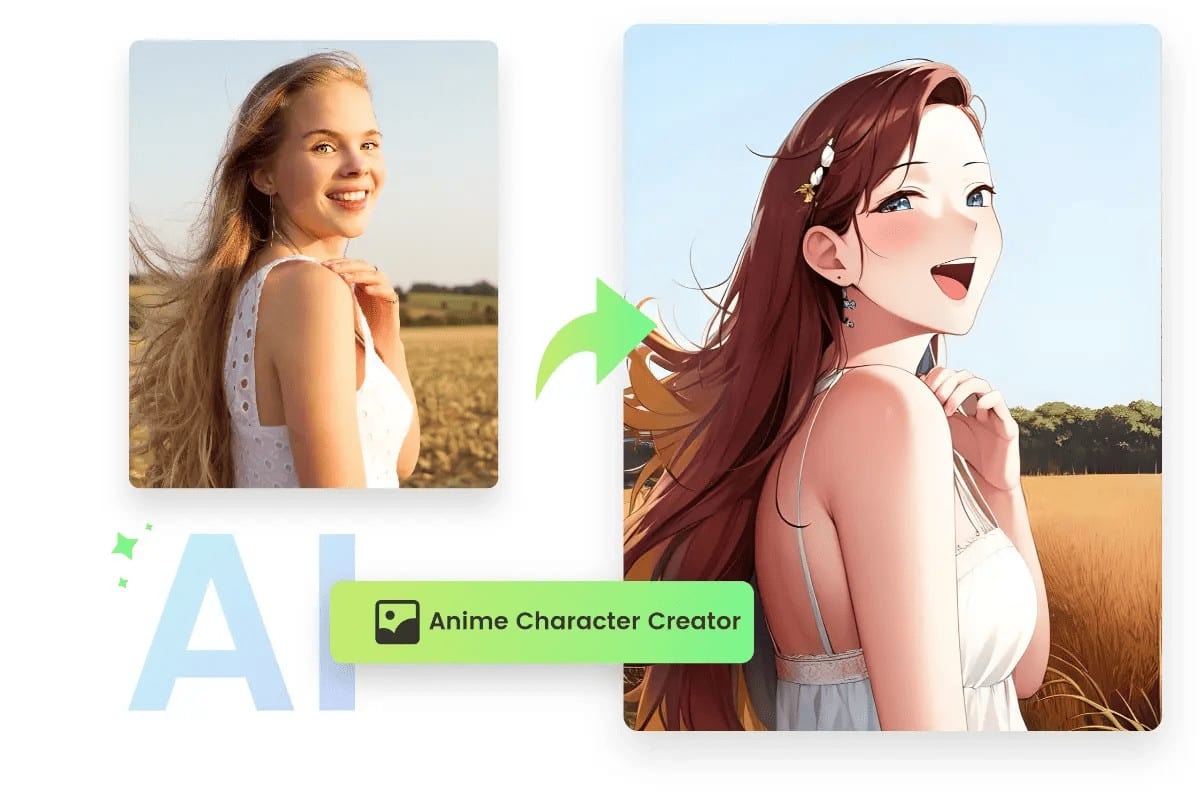

Anime to Real Life Conversion Is No Longer Just a Dream

Ever wondered what Goku would look like if you passed him on the street? Perhaps you are curious how Sailor Moon’s huge eyes and famous expressions would translate to a real face. You are not the only one. Tech nerds, artists, and fans everywhere dream of crossing into fictional realms and seeing their anime favorites realized in flesh and bone. Thanks to advances in artificial intelligence, anime to real life converters now make those wild ideas genuinely feasible.

These tools have rocked the fan base. Social media feeds abound with reimagined fan art, memes, and side-by-side comparisons. You’ll see forums arguing over a converted Naruto’s haircut or debating the uncanny valley effect when an AI takes things a little too literally. What started as an offbeat experiment has rapidly evolved into a compelling blend of imagination and technology.

What powers these anime to real life converters?

Let’s pull back the curtain. Generative adversarial networks (GANs) are the major players in these developments. Imagine them as a pair of AI models locked in a creative tug-of-war: one creates images, the other judges them. The first, known as the generator, tries to render anime faces as realistic photos. The second, the discriminator, decides whether they look convincingly real or not. This back-and-forth polishes the result, transforming it from embarrassing to remarkable.
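To make the tug-of-war a little more concrete, here is a minimal sketch of the two competing scores, assuming the discriminator outputs a probability between 0 and 1 that an image is a real photo. The score lists are made-up numbers for illustration, and no real converter is claimed to use exactly this form; it is simply the textbook binary cross-entropy setup.

```python
import math

EPS = 1e-12  # small constant so we never take log(0)

def discriminator_loss(real_scores, fake_scores):
    """Low when real photos score near 1 and generated images score near 0."""
    real_term = sum(-math.log(s + EPS) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - s + EPS) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Low when the discriminator is fooled into scoring fakes near 1."""
    return sum(-math.log(s + EPS) for s in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs (assumed values, not measured data)
real_photos = [0.90, 0.95]
early_fakes = [0.05, 0.10]  # early in training: fakes are easy to spot
later_fakes = [0.45, 0.55]  # later: the discriminator is close to guessing

# The generator's loss falls as its images get more convincing...
print(generator_loss(early_fakes) > generator_loss(later_fakes))  # True
# ...while the discriminator's loss rises, which is the tug-of-war in numbers.
print(discriminator_loss(real_photos, early_fakes)
      < discriminator_loss(real_photos, later_fakes))  # True
```

Each side's gain is the other's loss, which is why training tends to push both models to improve together.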

Two well-known names here are CycleGAN and StyleGAN, each with a flavor all its own. CycleGAN shines at translating images from one style to another: think of making Van Gogh’s sunflowers look like modern photos or, in this case, transforming a hand-drawn anime eye into something that blinks and glimmers just like yours. StyleGAN handles facial features, skin texture, and even subtle lighting, producing results that occasionally verge on disturbingly real.
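The core trick behind CycleGAN-style translation is cycle consistency: convert anime to photo and back again, and you should land on the drawing you started with. The sketch below illustrates that idea with toy stand-ins. The functions `to_photo`, `to_anime`, and `lossy_to_anime` are hypothetical placeholders (real generators are neural networks), and the "images" are just short lists of pixel values.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(anime_img, photo_img, to_photo, to_anime):
    """Penalize round trips that fail to return the original image."""
    # anime -> photo -> anime should reproduce the drawing
    forward = l1_distance(anime_img, to_anime(to_photo(anime_img)))
    # photo -> anime -> photo should reproduce the photo
    backward = l1_distance(photo_img, to_photo(to_anime(photo_img)))
    return forward + backward

# Toy generators (assumptions for illustration only)
def to_photo(pixels):
    return [p * 0.5 for p in pixels]

def to_anime(pixels):
    return [p * 2.0 for p in pixels]  # exact inverse of to_photo

def lossy_to_anime(pixels):
    return [p * 1.5 for p in pixels]  # does not undo to_photo

anime = [0.2, 0.8, 0.4]
photo = [0.1, 0.3, 0.5]

perfect = cycle_consistency_loss(anime, photo, to_photo, to_anime)
lossy = cycle_consistency_loss(anime, photo, to_photo, lossy_to_anime)
print(perfect, lossy > perfect)
```

Because this loss needs no matched pairs, the approach can learn from a pile of anime faces and a separate pile of photos, with no one having to draw the same person both ways.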

Under the hood, these models train on thousands or even millions of faces and art styles. They pick up facial geometry, lighting patterns, and the exaggerated elements that make anime so distinctive. The outcome is a model that can blend the logic of real human anatomy with the DNA of a cartoon face.

Data: More Than Ones and Zeros

You cannot teach an algorithm to transform a blue-haired fantasy into a believable barista without showing it what humans look like. Data is the raw ingredient. Large image datasets, like Flickr-Faces-HQ (FFHQ) or celebrity photo archives, become the AI’s textbooks. For anime, databases like Danbooru offer an extensive library with tags for everything from swordsmen to magical heroines.

Preparation consists of aligning, cropping, and balancing images so that translation makes sense pixel by pixel. If the dataset is skewed or too small, the generated photos will fall short: you get faces that either lack essential traits or look excessively generic. Quality in, quality out.
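Two of those preparation steps, cropping and balancing, can be sketched in a few lines. This is an illustration under assumptions, not any particular project's pipeline: the file names and hair-color labels are invented, and alignment (which usually relies on a facial landmark detector) is left out. The crop box uses the (left, top, right, bottom) ordering that image libraries such as Pillow expect.

```python
import random
from collections import defaultdict

def center_crop_box(width, height):
    """Return (left, top, right, bottom) for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)

def balance_by_label(samples, seed=0):
    """Downsample every label group to the size of the smallest group."""
    groups = defaultdict(list)
    for path, label in samples:
        groups[label].append(path)
    target = min(len(paths) for paths in groups.values())
    rng = random.Random(seed)  # fixed seed keeps the selection repeatable
    balanced = []
    for label, paths in sorted(groups.items()):
        for path in rng.sample(paths, target):
            balanced.append((path, label))
    return balanced

# Hypothetical dataset: heavily skewed toward one hair color
samples = [(f"blue_{i}.png", "blue_hair") for i in range(6)]
samples += [(f"pink_{i}.png", "pink_hair") for i in range(2)]

print(center_crop_box(1920, 1080))  # (420, 0, 1500, 1080)
print(len(balance_by_label(samples)))  # 4
```

Downsampling is the bluntest way to balance a dataset; collecting more examples of the rare groups is usually better when it is possible.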

Keep in mind that privacy and copyright debates loom large here. Using fan art collections or celebrity images raises serious ethical questions. While you stare at the outputs, it’s easy to forget the fraught journey those images took to reach the neural network.

Not Every Conversion Is Created Equal

Running a spiky-haired shonen hero through two different platforms will produce quite different results. Some AI models grasp subtleties and can ground large, expressive eyes in reality while keeping their playfulness. Others go too far, giving you something straight out of a dreamscape or even a thriller.

Some sites, such as Artbreeder, let users adjust “genes” (think “add a bit more jawline” or “slimmer eyebrows”) until the face feels right. This collaborative approach lets communities help define how familiar characters are reinterpreted. Others take a more hands-off approach where the AI does the work and you get what you get, a process both fascinating and laugh-out-loud entertaining.
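One common way such sliders can work in GAN-based tools is by nudging a face's latent code along a direction associated with a trait. The sketch below shows only that general idea. It is not a description of Artbreeder's internals, and the four-number latent code and the "jawline" direction are invented for illustration (real models use hundreds of dimensions and learn their directions from data).

```python
def edit_latent(latent, direction, strength):
    """Shift a latent vector along a trait direction by `strength`."""
    if len(latent) != len(direction):
        raise ValueError("latent and direction must have the same length")
    return [z + strength * d for z, d in zip(latent, direction)]

# Hypothetical values, chosen only to make the arithmetic easy to follow
face = [0.5, -1.0, 0.25, 0.0]
jawline = [0.0, 1.0, 0.0, 0.0]  # assumed direction for "more jawline"

stronger_jaw = edit_latent(face, jawline, 0.5)
print(stronger_jaw)  # [0.5, -0.5, 0.25, 0.0]
```

Feeding the edited code back into the generator would produce the adjusted face, and a negative strength would push the trait the other way.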

What do the best anime to real life converters nail? They map traits onto human proportions while still preserving the core of an anime character. You will sense the artifice right away if the nose gets too pointed or the eyes are too large. But all the appeal disappears if everything is “corrected” into blandness.

The Mix of Art and Science: When Human Creativity Meets Artificial Intelligence

Artists are not being replaced; rather, they are being energized. Many digital artists start from AI output, then refine the results in Photoshop or Procreate. They add personal flair, fix weird ears, adjust skin tones, or restore details the AI overlooked. AI offers a rough clay model; human hands bring soul and detail.

Professional studios have their eye on several anime to real world converters. Imagine pitching a live-action adaptation where, thanks to neural networks, every main character already has a hyper-realistic concept image. In casting and prosthetic testing alone, that could save a fortune in money and time while feeding the imagination.

Don’t forget cosplay, either. These converters help aspiring cosplayers see how a character’s look would work on a real face. Early feedback and better preparation help their enthusiasm soar.

Challenges: Not All Sunshine and Rainbows

Though the results look beautiful at first, progress is messy under the surface. Anime exaggerates everything: hair color, eye size, otherworldly shapes. AI has to strike a balance between what is believable and what is recognizable.

Skin tones can be handled strangely, especially when input images feature avant-garde shading effects. Some AI engines handle lighter and darker characters with different degrees of accuracy, which raises questions of inclusiveness. And don’t get me started on hair. Oddly colored hair stumps several networks, which blend magenta and green into awkward pastels not found in real life.

Then there is the specter of the uncanny valley. Some AI-generated faces seem almost perfect yet feel strange. Perhaps the lips curve slightly off or the eyes shine in an odd way. This happens because anime often disregards anatomical norms, and translating those stylistic choices into flesh and blood sometimes backfires.

The Future of AI Anime to Real Life Conversion

The major platforms keep evolving. Open-source projects like DeepAnime and GANPaint are advancing quickly. Every update brings more subtlety to facial features and expression handling. AI models capable of understanding emotion, cultural background, and even vocal patterns could become common in the future. Imagine AI one day converting a whole anime scene into realistic live-action video.

Companies are also beginning to use this technology. Fashion houses commission AI-generated models inspired by anime archetypes for limited-edition launches or marketing campaigns. Streaming giants discuss how to use these conversions to audition digital performers for animated-to-live-action adaptations.