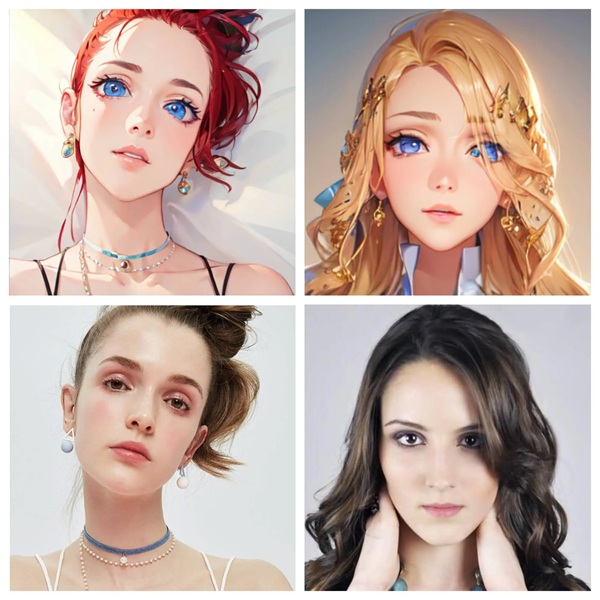

Imagine an artist using an unusual technique to turn a photo into anime: a blend of magic and science that transforms everyday images into the whimsical, exaggerated characters we love in anime. The process can look like effortless handiwork. Still, behind every striking picture is a computer system working to decode complex art forms, and the choice and quality of the training data strongly shape these digital paintings.

Many wonder how computers develop an eye for style. Imagine a chef learning recipes by tasting many dishes instead of consulting a cookbook. AI models likewise learn directly from thousands of photographs, each with its own expression and flavor. The models pick up recurring traits such as big eyes, distinctive haircuts, and the dramatic facial expressions common to anime. Even though it resembles human observation, the process can feel more like solving a jigsaw puzzle without knowing what the final picture looks like.

Let us explore the science behind this transformation. Neural networks form the backbone of these systems. Think of them as digital brains able to pick up subtle visual signals. Given a new portrait, the model draws on patterns it learned during training. There is nothing magical about the process: it relies on statistical patterns inferred from hundreds of thousands of examples. Years of computer vision and image processing research have refined this approach.

The resulting style depends heavily on what the model has seen. An AI trained on nearly 100,000 illustrated scenes produces noticeably different output from one trained on a smaller or less varied set. The output mirrors the training input, just as a painter's work reflects the brushes and colors at hand. Some models produce a fresh, modern design, while others imitate a classic anime look reminiscent of early Japanese animation. These differences come directly from variation in the training images.

Gathering the right training images is like assembling a jigsaw puzzle in a cluttered attic: it takes organization and sorting. Many researchers spend hours selecting data from animation studios, art sites, and online archives. In one recent study, a dataset of roughly 50,000 carefully chosen photographs produced noticeably better style replication than a randomly assembled collection. Strong, well-organized input clearly pays off: the AI learns the colors, brushwork, and even the emotional punch characteristic of anime storytelling.

If you look closely, this technology is typically approached in two ways. The first is style transfer: algorithms using this approach overlay stylistic cues from one image onto another. The second trains a model end-to-end on pairs of input photos and anime-style output images. The second approach often produces a more consistent, smoother transformation. People in the field joke that the training data is like a strict schoolteacher: it imposes a structure the model eventually learns to follow.
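The style-transfer route can be illustrated with the Gram-matrix style loss from neural style transfer (Gatys et al.). This is a well-known technique swapped in for illustration, not necessarily what any particular photo-to-anime tool uses. In a real system the feature maps would come from a pretrained CNN; here random arrays stand in for them, and the function names, array sizes, and loss weights are hypothetical.

```python
import numpy as np

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel correlations of a (channels, height, width) feature map."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)       # one row per channel
    return flat @ flat.T / (h * w)          # (c, c), normalized by spatial size

def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    return float(np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2))

def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Mean squared difference between raw feature maps (preserves the photo's layout)."""
    return float(np.mean((generated - content) ** 2))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-ins for CNN feature maps: 8 channels on a 16x16 grid.
    photo_feats = rng.normal(size=(8, 16, 16))
    anime_feats = rng.normal(loc=0.5, size=(8, 16, 16))
    candidate = photo_feats.copy()

    # Weighted total that an optimizer would minimize by adjusting the candidate image.
    alpha, beta = 1.0, 10.0                 # hypothetical content/style weights
    c_loss = content_loss(candidate, photo_feats)
    s_loss = style_loss(candidate, anime_feats)
    print(f"content={c_loss:.3f} style={s_loss:.3f} total={alpha * c_loss + beta * s_loss:.3f}")
```

Because the Gram matrix sums over every position, it records which features occur together (color and texture statistics) while ignoring where they occur. That is why it captures "style" while the content loss keeps the original layout.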

Have you ever wondered why a system sometimes seems to get it exactly right? It comes down to how it learns. Often the available training data is the limiting factor in achieving the ideal style transformation. A messy dataset can leave blurry or misshapen elements, while a well-curated collection of photographs can yield remarkable results. For instance, a study comparing two AI models found that one trained on more than 100,000 photographs achieved 35% higher user satisfaction than one trained on just 10,000 images.

When translating a picture into anime style, computers separate shape, color, and shading into distinct channels. Convolutional neural networks are central to this process. They break the input image into layers of abstract features such as edges and textures, and the system generates an anime-style rendition from those layers. The interplay of these layers is a bit like mixing ingredients in a stew: each addition contributes flavor and aroma until the final dish is rich and satisfying.
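To make the idea of "layers of abstract features" concrete, here is a minimal sketch of a single convolution step followed by a ReLU. A trained CNN learns its kernels from data; the fixed Sobel edge kernel and the toy image below are assumptions chosen only because their output is easy to interpret, and the function names are hypothetical.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2-D cross-correlation (what deep-learning 'conv' layers compute) with no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out the rest (a common CNN activation)."""
    return np.maximum(x, 0.0)

# Sobel kernel that responds to dark-to-bright changes from left to right
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

# Toy 6x6 grayscale "photo": dark left half, bright right half
image = np.zeros((6, 6))
image[:, 3:] = 1.0

edges = relu(conv2d_valid(image, SOBEL_X))
print(edges)
```

Each row of `edges` comes out as `[0, 4, 4, 0]`: the filter fires only where its 3x3 window straddles the boundary between the dark and bright halves. Deeper layers in a real network combine many such responses into textures, shapes, and eventually style cues.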

Sometimes the transformation sparks philosophical debate about creative legitimacy. Critics argue that AI merely imitates human creativity, and they point out that the soul of a famous painting cannot be replicated. In practice, the technology draws on patterns found across many masterpieces and contemporary designs. Although its output can match a style closely, it cannot reproduce the personal inspiration that comes from an artist's own experience.

Hobbyists experimenting with these tools have found an interesting twist. At a family gathering, a friend once joked, laughing, "My aunt's portrait instantly went from family photo to anime superstar." Many tools let anyone upload a photo and watch it transform in a few seconds. The user-friendly nature of these programs has generated a lot of enthusiasm for anime transformation, turning the art from an unreachable secret into an everyday experiment anyone can try.

Training these models requires substantial hardware and software support. Modern systems often process huge image collections on clusters of GPUs, which demands a great deal of computing power. Researchers note that processing large amounts of training data can take days or weeks even with state-of-the-art tools. The scale of such projects is striking: some consume tens of terabytes of data spread across several server farms. The path from input to final product is a marathon, not a sprint.

The debate is not just technical. Machines learning from existing artwork raises ethical questions. Many artists worry about protecting their original works. It's like finding your handwritten letter mixed into someone else's novel. Some projects address these concerns with a pay-for-access approach, in which artists or copyright holders are paid when their work is used. Besides encouraging more artists to participate, this compensation model produces richer, more varied training sets.

Look, human ingenuity and technology run closely in parallel. These systems do more than copy an image when they translate it into anime: they mix and match visual styles learned from many sources, picking bits and pieces. Some enthusiasts say the results could inspire new artistic trends, and the interplay between digital innovation and sketchpads may soon lead to joint projects between programmers and artists. Just as mixed genres produce hits in music or fashion, a well-trained model could spark a new wave in anime art.